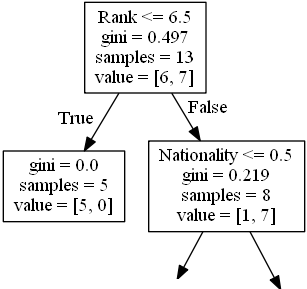

A decision tree keeps segregating the data until it reaches a leaf node, and a leaf node cannot be segregated any further. The accompanying image shows the block structure of a decision tree. In the image, the nodes represent the features of the data that are used to make predictions, and the arrows represent the branches that link the decision nodes to the leaf nodes. Each leaf node holds the result produced from the samples of the data that reach it.

Entropy and information gain are the basic functions used to build a decision tree. Both come from information theory: entropy measures how mixed the classes in a node are, and information gain is the reduction in entropy that a split achieves.
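To make entropy and information gain more concrete, here is a minimal sketch in plain Python. The function names `entropy` and `information_gain` and the toy labels are assumptions made for illustration; they are not taken from any particular library.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child groups."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted


# Hypothetical toy node: 4 "yes" samples and 4 "no" samples.
parent = ["yes"] * 4 + ["no"] * 4

# A candidate split that separates the two classes perfectly.
perfect_split = [["yes"] * 4, ["no"] * 4]

# A candidate split that leaves both children as mixed as the parent.
useless_split = [["yes", "yes", "no", "no"], ["yes", "yes", "no", "no"]]

print(entropy(parent))                          # 1.0
print(information_gain(parent, perfect_split))  # 1.0
print(information_gain(parent, useless_split))  # 0.0
```

A tree-building algorithm that uses this criterion would prefer the first split, since it removes all of the uncertainty in the node, while the second split removes none.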

Because a random forest is assembled from decision trees, its performance depends on how those trees are trained. Bagging, or bootstrap aggregating, is an ensemble meta-algorithm that improves the accuracy of machine learning algorithms. A random forest makes its prediction by combining the predictions of its trees: it averages them for regression and takes a majority vote for classification. Increasing the number of trees in the forest generally improves accuracy, although the gains level off beyond a certain point. While the random forest is based on the decision tree, it mitigates some of the decision tree's limitations, most notably the tendency to overfit. scikit-learn provides a random forest implementation that needs very little configuration.

How Does the Random Forest Algorithm Work?

Before looking at how a random forest works, we need to understand the decision tree, because decision trees are the building blocks of a random forest. As the name suggests, a decision tree algorithm forms a tree-like structure. Three main components make up this structure: decision nodes, branches, and leaf nodes. When a decision tree algorithm works on the data, it divides the data into branches, and each branch is in turn divided into further branches.
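To illustrate the scikit-learn point, here is a minimal sketch that trains a single decision tree and a random forest on the same data. The synthetic dataset from `make_classification`, the choice of 100 trees, and the fixed `random_state` values are assumptions made for this example, not settings from the article.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data standing in for a real dataset (an assumption for illustration).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# A single decision tree, for comparison.
tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# A random forest of 100 trees; everything else is left at its default.
forest = RandomForestClassifier(n_estimators=100, random_state=0).fit(
    X_train, y_train
)

# score() returns the mean accuracy on the held-out test set.
tree_accuracy = tree.score(X_test, y_test)
forest_accuracy = forest.score(X_test, y_test)

print(f"single tree:   {tree_accuracy:.3f}")
print(f"random forest: {forest_accuracy:.3f}")
print(len(forest.estimators_))  # number of fitted trees in the forest
```

The exact accuracies depend on the data and the scikit-learn version, so no particular numbers should be expected; the point is that the forest needs only one extra parameter, `n_estimators`, to be usable.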

Random forest, or random decision forest, is a tree-based ensemble learning method for classification and regression. It can be applied in fields such as banking and e-commerce to support decision making and to predict behavior and outcomes. The basic idea behind the random forest algorithm is to combine many classifiers in one algorithm. A random forest consists of many decision trees, and those trees are prepared using a technique called bagging, or bootstrap aggregating, in which each tree is trained on a random sample of the training data drawn with replacement.
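The sampling and voting steps of bagging can be sketched in a few lines of plain Python. This is only an illustration of those two steps, not a full random forest: no trees are trained here, and the helper names `bootstrap_sample` and `majority_vote`, the row indices, and the example votes are all hypothetical.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement: one bootstrap sample."""
    return [rng.choice(rows) for _ in rows]


def majority_vote(predictions):
    """Return the most common label among the individual predictions."""
    return Counter(predictions).most_common(1)[0][0]


rng = random.Random(0)   # seeded so the sketch is repeatable
rows = list(range(10))   # hypothetical row indices of a training set

# Each tree in a bagged ensemble would be trained on its own sample.
samples = [bootstrap_sample(rows, rng) for _ in range(3)]
for sample in samples:
    # Some rows repeat and some are left out, which is what makes the trees differ.
    print(sorted(sample))

# Hypothetical class predictions from three trees for one input.
votes = ["approve", "reject", "approve"]
print(majority_vote(votes))  # approve
```

For regression, the voting step would be replaced by taking the mean of the trees' numeric predictions.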
